「Talking Head Anime from a Single Image」

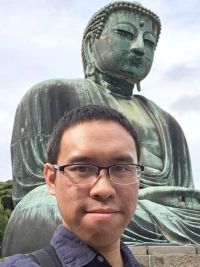

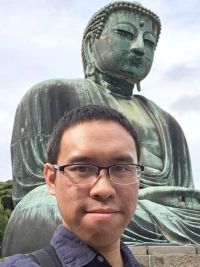

「Pramook Khungurn」

「Facial Expression Mimicry」

Source: Talking Head Anime from a Single Image

Fascinated by virtual YouTubers, I put together a deep neural network system that makes becoming one much easier. More specifically, the network takes as input an image of an anime character's face and a desired pose, and it outputs another image of the same character in the given pose. What it can do is shown in the video below:

I also connected the system to a face tracker, which allows the character to mimic my face movements. I can also transfer face movements from existing videos.

Recently, Gwern, a freelance writer,

Sizigi Studios, a San Francisco game developer, opened WaifuLabs, a website that allows you to customize a GAN-generated female character and buy merchandise featuring her.

Everything seems to point to a future where artificial intelligence is an important tool for anime creation, and I want to take part in realizing it. In particular, how can I make creating anime easier with deep learning? The lowest-hanging fruit seems to be creating VTuber content. So, in early 2019, I embarked on a quest to answer the following question:

So how do you become a VTuber to begin with? You need a character model whose movement can be controlled.

Being able to animate a character from a single image would make it much easier to become a VTuber.

This would be a boon not only to people like me who cannot draw but also to artists: they could draw a character and make it move immediately, without having to model it.

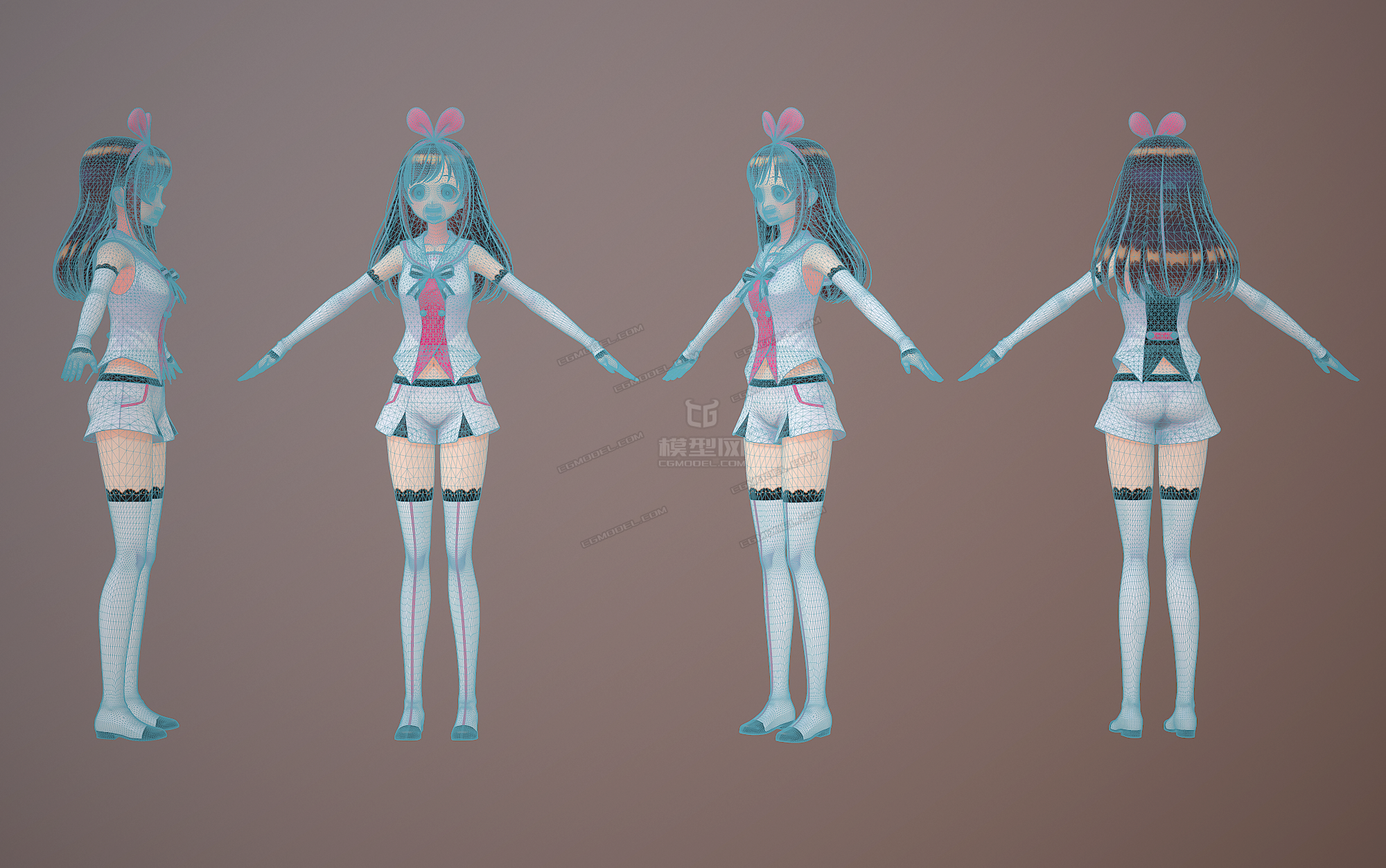

The problem I'm trying to solve is this: given an image of an anime character's face and a desired pose, generate another image of the same character with its face changed according to the pose.

I took advantage of the fact that there are tens of thousands of downloadable 3D models of anime characters, created for a 3D animation program called MikuMikuDance. I downloaded about 8,000 models and used them to render anime faces under random poses.

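I decompose the process into two steps. The first changes the facial features (the eyes and the mouth), and the second rotates the face. Each step is handled by its own network.

Let us call the first network the face morpher, and the second the face rotator.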
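The two-step decomposition amounts to composing two functions. The following is a minimal sketch, not the author's code. `face_morpher` and `face_rotator` are hypothetical stand-ins for the two trained networks. The six-component pose layout (three expression values, then three rotation values) is an assumption based on the three morphs and three rotation components discussed in this article.

```python
from typing import Callable, Sequence, TypeVar

Image = TypeVar("Image")  # whatever image type the two networks exchange

# Assumed pose layout: 3 expression values followed by 3 head-rotation values.
NUM_EXPRESSION = 3
NUM_ROTATION = 3


def animate(
    image: Image,
    pose: Sequence[float],
    face_morpher: Callable[[Image, Sequence[float]], Image],
    face_rotator: Callable[[Image, Sequence[float]], Image],
) -> Image:
    """Pose a rest-pose image by running the face morpher, then the face rotator."""
    expected = NUM_EXPRESSION + NUM_ROTATION
    if len(pose) != expected:
        raise ValueError(f"expected a {expected}-component pose, got {len(pose)}")
    expression = pose[:NUM_EXPRESSION]  # eye and mouth controls
    rotation = pose[NUM_EXPRESSION:]    # head rotation controls
    morphed = face_morpher(image, expression)  # step 1: change facial features
    return face_rotator(morphed, rotation)     # step 2: rotate the face


# Toy stand-ins that just record what they received.
result = animate(
    "rest.png",
    [1.0, 0.0, 0.5, 0.2, 0.0, -0.1],
    face_morpher=lambda img, expr: ("morphed", img, tuple(expr)),
    face_rotator=lambda img, rot: ("rotated", img, tuple(rot)),
)
```

Keeping the two networks as separate callables mirrors the separate training data each one needs: the rotator only ever sees images whose expression has already been changed.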

| Model | Results | Hallucinates Disoccluded Parts? |
|---|---|---|
| Pumarola et al. | blurry | Yes |
| Zhou et al. (Appearance Flow) | sharp | No |

The input image must be 256×256 pixels in RGBA format and must have a transparent background.

More specifically, pixels that do not belong to the character must have the RGBA value of (0,0,0,0), and those that do must have non-zero alpha values.

The character's head must be looking straight ahead, in the direction perpendicular to the image plane. The head must be contained in the center 128×128 box, and the eyes and the mouth must be wide open.

(The network can handle images with eyes and mouth closed as well. However, in such a case, it cannot open them because there's not enough information on what the opened eyes and mouth look like.)
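The mechanical parts of this input specification are easy to check before feeding an image to the network. The following is a minimal pure-Python sketch. It assumes the image is given as rows of `(r, g, b, a)` tuples with 8-bit channels, and the function name is hypothetical. It cannot verify head placement or open eyes and mouth, which still need a human look.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int, int]  # (r, g, b, a) with 8-bit channels
SIZE = 256


def check_input_image(rows: Sequence[Sequence[Pixel]]) -> List[str]:
    """Return a list of spec violations; an empty list means the image passes.

    Only the mechanical requirements are checked. Head placement and open
    eyes/mouth still have to be checked by eye.
    """
    if len(rows) != SIZE or any(len(row) != SIZE for row in rows):
        return [f"image must be {SIZE}x{SIZE}"]
    if any(len(p) != 4 for row in rows for p in row):
        return ["every pixel must have 4 channels (RGBA)"]
    problems = []
    # Pixels with alpha 0 are background and must be exactly (0, 0, 0, 0).
    dirty = sum(1 for row in rows for (r, g, b, a) in row if a == 0 and (r, g, b) != (0, 0, 0))
    if dirty:
        problems.append(f"transparent pixels with non-zero RGB: {dirty}")
    # A transparent background implies at least some fully transparent pixels.
    if not any(a == 0 for row in rows for (_, _, _, a) in row):
        problems.append("no transparent pixels: background is not transparent")
    return problems
```

A typical failure this catches is an image exported with "transparent" pixels that still carry white RGB values, which violates the (0,0,0,0) rule even though it looks fine in a viewer.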

In 3D character animation terms, the input is the rest pose shape to be deformed.

The three other components control how the head is rotated.

In 3D animation terms, the head is controlled by two "joints," the root and the tip, connected by a "bone."

In the skeleton of the character, the tip is a child of the root. So, a 3D transformation applied to the root would also affect the tip, but not the other way around.
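The parent-child relationship is what makes the two joints behave differently. A point on the head is transformed by the tip's rotation first and then by the root's. So rotating the root moves everything above it, while rotating the tip leaves the root, and the tip's own position, in place.

The following is a minimal sketch with 3×3 rotation matrices. It assumes the root sits at the origin, the bone is a fixed offset from root to tip, and the z-axis points out of the image plane. Which rotation axes belong to which joint in the actual rig is not specified here, so the matrices are simply inputs.

```python
import math
from typing import List

Vec = List[float]
Mat = List[List[float]]

IDENTITY: Mat = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def rot_z(degrees: float) -> Mat:
    """Rotation about the z-axis (assumed to point out of the image plane)."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply(m: Mat, v: Vec) -> Vec:
    """Matrix-vector product."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def add(a: Vec, b: Vec) -> Vec:
    return [x + y for x, y in zip(a, b)]


def tip_position(root_rot: Mat, bone: Vec) -> Vec:
    """World position of the tip joint; it depends only on the root's rotation."""
    return apply(root_rot, bone)


def head_point(p_local: Vec, root_rot: Mat, tip_rot: Mat, bone: Vec) -> Vec:
    """World position of a head point given relative to the tip joint.

    The tip is a child of the root: the tip's rotation is applied first,
    the point is carried along the bone, and the root's rotation is applied last.
    """
    return apply(root_rot, add(bone, apply(tip_rot, p_local)))
```

For example, with `bone = [0.0, 1.0, 0.0]`, rotating the root by 90° about z moves the tip to roughly `[-1, 0, 0]`. Rotating only the tip by 90° moves head points around the tip but leaves `tip_position` unchanged.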

I created a training dataset by rendering 3D character models. While 3D renderings are not the same as drawings, they are much easier to work with because 3D models are controllable. I can come up with any pose, apply it to a model, and render an image showing exactly that pose. Moreover, a 3D model can be used to generate hundreds of training images, so I only need to collect several thousand models.
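Because any pose can be applied to a model, generating a training pose can be as simple as drawing each component at random. The sampler below is a hypothetical sketch, and the source does not say how poses were actually distributed. It assumes a six-component layout with three expression values in [0, 1] and three rotation values in [-1, 1]. It also assumes independent uniform sampling, seeded for reproducibility.

```python
import random
from typing import List


def sample_pose(rng: random.Random) -> List[float]:
    """Draw one hypothetical 6-component pose.

    Assumed layout: [left_eye, right_eye, mouth, rot_1, rot_2, rot_3].
    The expression values are morph weights in [0, 1]; the rotation values
    are normalized to [-1, 1] with 0 meaning no rotation.
    """
    expression = [rng.uniform(0.0, 1.0) for _ in range(3)]
    rotation = [rng.uniform(-1.0, 1.0) for _ in range(3)]
    return expression + rotation


rng = random.Random(2019)
poses = [sample_pose(rng) for _ in range(5)]
```

Passing an explicit `random.Random` instance keeps dataset generation reproducible without touching the global random state.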

I use models created for a 3D animation program called MikuMikuDance (MMD). The main reason is that there are tens of thousands of downloadable models of anime characters. I am also quite familiar with the file format because I used MMD models to generate training data for one of my previous research papers. Over the years, I have developed a library to manipulate and render the models, and it has allowed me to automate much of the data generation process.

To create a training dataset, I downloaded around 13,000 MMD models from websites such as

I also found models by following links from

Downloading alone took about two months.

The raw model data are not enough to generate training data. In particular, there are two problems.

The first problem is that I did not know exactly where each model's head was. I needed to know this because the input specification requires that the head be contained in the middle 128×128 box of the input image. So, I created a tool that allowed me to annotate each model with the y-positions of the bottom and the top of the head. The bottom corresponds to the tip of the chin, but the top does not have a precise definition. I mostly set the top so that the whole skull and the flat portion of hair covering it were included in the range, arbitrarily excluding hair that pointed upward. If the character wore a hat, I simply guessed the location of the top of the head. Fortunately, the positions do not have to be precise for a neural network to work. You can see the tool in action in the video below.

The second problem is that I did not know exactly how to control each model's eyes. Facial expressions of MMD models are implemented with "morphs" (aka blend shapes). A morph typically corresponds to a facial feature being deformed in a particular way. For example, most models have a morph that closes both eyes and another that opens the mouth as if to say "ah."

To generate the training data, I need to know the names of three morphs: one that closes the left eye, one that closes the right eye, and one that opens the mouth.

The last one is named "あ" in almost all models, so I did not have a problem with it.

The situation is more difficult with the eye-closing morphs. Different modelers name them differently, and one or both of them might be missing from some models.

I created a tool that allowed me to cycle through the eye controlling morphs and mark ones that have the right semantics. You can see a session of me using the tool in the following video.

You can see in the video that I collected 6 morphs instead of 2.

The reason is that MMD models generally come with two types of winks. Normal winks have eyelids curved downward, and smile winks have eyelids curved upward, resulting in a happy look.

Moreover, for each type of wink, there can be three different morphs: one that closes the right eye, one that closes the left, and one that closes both.

At annotation time, I was not sure which type of wink and which morphs to use, so I decided to collect them all. In the end, I used only the normal winks because more models have them. While morphs that close both eyes may seem superfluous, some models do not have any morphs that close only one eye.
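The per-model annotation amounts to a small record of up to six morph names, from which the three controls used for training are chosen. The following is a hypothetical sketch; the field names and the fallback rule are illustrative, not taken from the author's tool. It uses the normal winks, as described above. It treats a model as unusable when a per-eye normal wink or the "あ" morph is missing, because the source does not say how such models were handled.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass
class EyeMorphAnnotation:
    """Hypothetical record of the eye-closing morphs marked for one model."""
    normal_left: Optional[str] = None
    normal_right: Optional[str] = None
    normal_both: Optional[str] = None
    smile_left: Optional[str] = None
    smile_right: Optional[str] = None
    smile_both: Optional[str] = None


MOUTH_MORPH = "あ"  # the "ah" mouth-opening morph found in almost all models


def select_morphs(ann: EyeMorphAnnotation, morph_names: Sequence[str]) -> Optional[Dict[str, str]]:
    """Pick the three morphs to drive, or None if the model lacks one of them."""
    if ann.normal_left is None or ann.normal_right is None:
        return None  # assumption: models without per-eye normal winks are skipped
    if MOUTH_MORPH not in morph_names:
        return None
    return {"left_eye": ann.normal_left, "right_eye": ann.normal_right, "mouth": MOUTH_MORPH}
```

Storing all six names, even though only three are used, matches the decision to collect everything at annotation time and choose later.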

Annotating the models, including developing the tools to do so, took about 4 months. It was the most time-consuming part of the project.

To generate a training image, I pick a model and a pose. I render the posed model using an orthographic projection so that the y-positions of the top and bottom of the head (obtained through manual annotation in Section 5.1) correspond to the middle 128-pixel vertical strip of the image. I use the orthographic projection rather than the perspective projection because drawings, especially of VTubers, do not seem to have foreshortening effects.
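The framing requirement pins down the orthographic camera's vertical extent. The annotated head span must occupy 128 of the 256 rows, so the visible extent is twice the head height, centered on the head. The following sketch shows that arithmetic. It assumes image row 0 is at the top and that the camera's extent is set directly in model units. The function names are hypothetical, and for a square image the horizontal extent would be the same.

```python
from typing import Tuple

IMAGE_SIZE = 256
HEAD_STRIP = 128  # the head must span the middle 128 rows


def ortho_vertical_bounds(head_bottom: float, head_top: float) -> Tuple[float, float]:
    """Return (y_min, y_max) of an orthographic view framing the annotated head."""
    if head_top <= head_bottom:
        raise ValueError("head_top must be above head_bottom")
    half_extent = (head_top - head_bottom) * IMAGE_SIZE / HEAD_STRIP / 2
    center = (head_top + head_bottom) / 2
    return center - half_extent, center + half_extent


def y_to_row(y: float, bounds: Tuple[float, float]) -> float:
    """Map a model-space y coordinate to an image row (row 0 at the top)."""
    y_min, y_max = bounds
    return (y_max - y) / (y_max - y_min) * IMAGE_SIZE
```

For a head annotated from y = 10 to y = 12, the view spans y = 9 to 13. The head top lands on row 64 and the chin on row 192, which are exactly the edges of the middle strip.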

Rendering a 3D model requires specifying the light scattering properties of the model's surface. MMD generally uses toon shading, but I used a more standard Phong reflection model because I was too lazy to implement toon shading. Depending on the model data, the resulting training images might look more 3D-like than typical drawings. However, in the end, the system still worked well on drawings despite being trained on 3D-like images.

Rendering also requires specifying the lighting in the scene. I used two light sources.
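For reference, the Phong reflection model computes intensity as an ambient term plus, for each light, two more terms. One is a diffuse term proportional to max(0, L·N). The other is a specular term proportional to max(0, R·V)^α, where R is the light direction reflected about the normal. The sketch below evaluates this for a single surface point under two directional lights, in grayscale for brevity. The coefficients and light directions are placeholders, since the source does not give the actual lighting setup.

```python
import math
from typing import Sequence, Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def normalize(v: Vec) -> Vec:
    n = math.sqrt(dot(v, v))
    return (v[0] / n, v[1] / n, v[2] / n)


def phong(normal: Vec, view: Vec, lights: Sequence[Vec],
          ka: float = 0.1, kd: float = 0.6, ks: float = 0.3, shininess: float = 20.0,
          ambient: float = 1.0, light_intensity: float = 1.0) -> float:
    """Grayscale Phong intensity at one point.

    normal: surface normal; view: direction from the point to the camera;
    lights: directions from the point toward each directional light.
    """
    n, v = normalize(normal), normalize(view)
    total = ka * ambient
    for light in lights:
        l = normalize(light)
        ndotl = dot(n, l)
        if ndotl <= 0.0:
            continue  # light is behind the surface: no diffuse or specular term
        r = tuple(2.0 * ndotl * n[i] - l[i] for i in range(3))  # reflect l about n
        total += kd * ndotl * light_intensity
        total += ks * max(0.0, dot(r, v)) ** shininess * light_intensity
    return total


key_and_fill = [(0.0, 0.5, 1.0), (-1.0, 0.0, 0.5)]  # placeholder light directions
shade = phong((0.0, 0.0, 1.0), (0.0, 0.0, 1.0), key_and_fill)
```

Toon shading, which MMD generally uses, would instead quantize the diffuse term into a few flat bands. That is one reason Phong-shaded renders can look more 3D-like than drawings.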

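Another detail of the data generation process is that each training example consists of three images: the character in the rest pose, the character with only its facial expression changed, and the character in the full sampled pose.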

I do this because I have separate networks for manipulating facial features and rotating the face, and they need different training data. Note that, since the image with the rest pose does not depend on the sampled pose, we only need to render it once for each model.
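Because the rest-pose image is shared by every pose of a model, it can be rendered once and cached. The following is a hypothetical sketch of assembling examples. `render(model_id, pose)` stands in for the author's MMD renderer and is not a real API. The pose is assumed to hold three expression values followed by three rotation values, and the caller supplies whatever pose encodes the rest pose.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, Iterable, List, Sequence, Tuple

Pose = Tuple[float, ...]
Render = Callable[[str, Pose], Any]  # hypothetical: (model_id, pose) -> image


@dataclass
class TrainingExample:
    rest: Any                # character in the rest pose (shared per model)
    expression_changed: Any  # only the expression components applied
    fully_posed: Any         # expression and head rotation applied
    pose: Pose


def build_examples(samples: Iterable[Tuple[str, Sequence[float]]], render: Render,
                   rest_pose: Pose) -> List[TrainingExample]:
    """Render the three images of each (model_id, pose) sample.

    Assumes the first 3 pose components are expression and the last 3 are rotation;
    the expression-changed image keeps the rest pose's rotation values.
    """
    rest_cache: Dict[str, Any] = {}
    examples = []
    for model_id, pose in samples:
        pose = tuple(pose)
        if model_id not in rest_cache:  # rest image does not depend on the pose
            rest_cache[model_id] = render(model_id, rest_pose)
        expression_only = pose[:3] + tuple(rest_pose[3:])
        examples.append(TrainingExample(
            rest=rest_cache[model_id],
            expression_changed=render(model_id, expression_only),
            fully_posed=render(model_id, pose),
            pose=pose,
        ))
    return examples
```

The face morpher would train on the (rest, expression) to expression_changed pairs, and the face rotator on the (expression_changed, rotation) to fully_posed pairs.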

I divided the models into three subsets so that I can use them to generate the training, validation, and test datasets.

While downloading the models, I organized them into folders according to the source materials. For example, models of Fate/Grand Order characters and those of Kantai Collection characters would go into different folders.

Because the three subsets draw their characters from different source materials, there is no overlap between the three datasets.
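Splitting by source-material folder rather than by individual model is what rules out overlap. Models of the same character live in the same folder and therefore land in the same subset. The following is a hypothetical sketch of such a grouped split. The assignment rule (a seeded shuffle of folders, filling test and validation quotas first) is an assumption, since the source does not say how folders were assigned.

```python
import random
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def split_by_folder(models: Iterable[Tuple[str, str]], val_models: int, test_models: int,
                    seed: int = 0) -> Dict[str, List[str]]:
    """Assign whole folders to test, validation, then training subsets.

    models: (folder, model_id) pairs. Every model in a folder goes to the same
    subset. Folders are added to test, then validation, until each holds at least
    the requested number of models; all remaining folders go to training.
    """
    folders: Dict[str, List[str]] = defaultdict(list)
    for folder, model_id in models:
        folders[folder].append(model_id)
    order = sorted(folders)  # deterministic base order before shuffling
    random.Random(seed).shuffle(order)
    splits: Dict[str, List[str]] = {"test": [], "validation": [], "training": []}
    quotas = [("test", test_models), ("validation", val_models)]
    for folder in order:
        target = "training"
        for name, quota in quotas:
            if len(splits[name]) < quota:
                target = name
                break
        splits[target].extend(folders[folder])
    return splits
```

Because whole folders move together, the subset sizes can overshoot the quotas slightly. That is the price of guaranteeing that no source material is shared between subsets.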

The numerical breakdown of the three datasets is as follows:

| | Training Set | Validation Set | Test Set |
|---|---|---|---|
| Models | 7,881 | 79 | 72 |
| Sampled Poses | 500,000 | 10,000 | 10,000 |

| | Training Set | Validation Set | Test Set |
|---|---|---|---|
| Rest Pose Images | 7,881 | 79 | 72 |
| Expression Changed Images | 500,000 | 10,000 | 10,000 |
| Fully Posed Images | 500,000 | 10,000 | 10,000 |
| Total Number of Images | 1,007,881 | 20,079 | 20,072 |
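The totals follow directly from the per-model and per-pose counts. Each model contributes one rest-pose image, and each sampled pose contributes one expression-changed image and one fully posed image. With $M$ models and $P$ sampled poses:

$$
\begin{aligned}
N &= M + 2P \\
N_{\text{train}} &= 7{,}881 + 2 \times 500{,}000 = 1{,}007{,}881 \\
N_{\text{val}} &= 79 + 2 \times 10{,}000 = 20{,}079 \\
N_{\text{test}} &= 72 + 2 \times 10{,}000 = 20{,}072
\end{aligned}
$$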

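When extracting MMD, use Bandizip on MikuMikuDance_v932x64.zip to avoid garbled file names. Right-click the archive and choose "Preview Archive." Then click "Code Page" on the right and select "Japanese."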

The 64-bit Windows version of MMD requires the Visual C++ 2010 SP1 Redistributable Package (x64) and DirectX to be installed.
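The need to pick "Japanese" suggests the archive's file names are stored in Shift_JIS (code page 932) without the UTF-8 flag. Tools that assume another encoding then show mojibake. If you prefer Python to Bandizip, a minimal sketch follows. It relies on the standard `zipfile` behavior of decoding unflagged names as CP437, which lets the original bytes be recovered and re-decoded as CP932. On Python 3.11+, `zipfile.ZipFile(path, metadata_encoding="cp932")` can do the decoding directly. The helper names here are hypothetical.

```python
import zipfile
from pathlib import Path

UTF8_FLAG = 0x800  # general-purpose bit 11: the member name is UTF-8


def member_name(info: zipfile.ZipInfo, encoding: str = "cp932") -> str:
    """Recover a member name that was stored in a legacy encoding."""
    if info.flag_bits & UTF8_FLAG:
        return info.filename  # already decoded correctly as UTF-8
    # zipfile decoded the raw bytes as cp437; undo that and decode as cp932.
    return info.filename.encode("cp437").decode(encoding)


def extract_cp932(archive: str, dest: str) -> list[str]:
    """Extract an archive whose names are in CP932; return the extracted file names."""
    root = Path(dest).resolve()
    names = []
    with zipfile.ZipFile(archive) as zf:
        for info in zf.infolist():
            name = member_name(info)
            target = (root / name).resolve()
            if root not in target.parents:  # refuse "../" tricks outside dest
                raise ValueError(f"unsafe path in archive: {name}")
            if info.is_dir():
                target.mkdir(parents=True, exist_ok=True)
                continue
            target.parent.mkdir(parents=True, exist_ok=True)
            with zf.open(info) as src, open(target, "wb") as out:
                out.write(src.read())
            names.append(name)
    return names
```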